ChatGPT is a Test for the Graders, not the Students

The problem isn't ChatGPT's effect on the students. The problem is going to be ChatGPT's effect on the graders. And not just in colleges, but in real businesses that matter.

Jonathan V. Last has a controversial take/modest proposal in the pages of the Bulwark today: let students cheat with ChatGPT.

The point of assigning papers isn’t to teach kids how to write—it’s to test to see if kids have done the reading. And the reading is the most important part of the educational process, followed closely by the absorption of whatever insights the professors can impart.

Writing papers is just a way of spot checking that process in order to assign grades to it. Which is another way of saying: busy work.

So who cares if kids use ChatGPT to break that paradigm?

If kids want to skip the reading and not learn anything from a course, that’s on them. They will either be the poorer for it later on. Or they won’t.

I believe this is a complete reversal of what we need to be worried about. I’m not at all worried about ChatGPT’s effect on the students, because it’s very easy to fulminate about the students. They don’t have power yet. That’s why they’re students.

I’m terrified about ChatGPT’s effect on the graders.

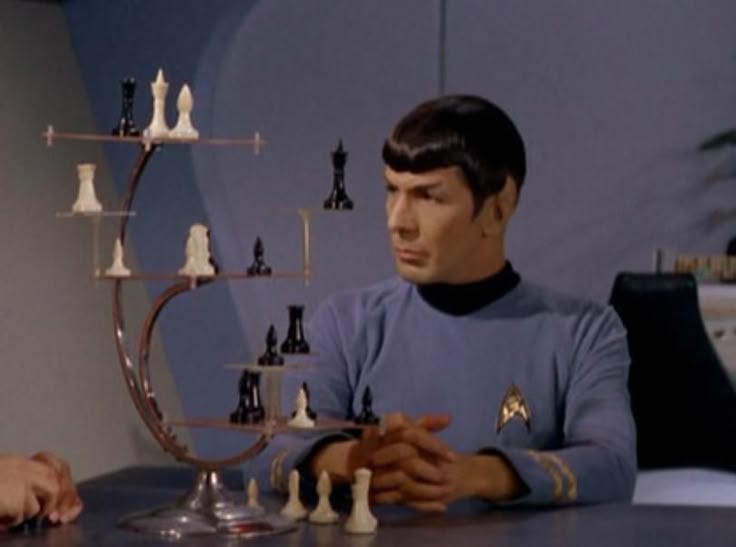

We've debated what 'artificial intelligence' would consist of since Rossum's Universal Robots. The answer has always been 'something it can't do yet' - what exactly that something is, and how we should measure it, varies. In Star Trek: The Original Series and 2001: A Space Odyssey, the intimidating thing the computer does to prove that it is smarter than any of the human John Henries arrayed before it is play chess well. Computers couldn't play chess well at the time, so a computerized chess champion seemed like a plausible sign that we had finally reached artificial intelligence. Then fellow Substacker Garry Kasparov lost to Deep Blue in 1997 - but we clearly still weren't at artificial intelligence yet. So, whatever the boundary was, it wasn't chess.

When chess failed, some of us briefly retreated to Go (which would fall in the 2010s), but most people fell back on WWII hero Alan Turing's idea. Turing quite logically pointed out that you can't prove that another human being you're talking to is intelligent; but since you're capable of talking to them, you extend to them the 'polite convention' that they are intelligent beings. Therefore, an AI that could pose as a human being so successfully that a trained interlocutor could do no better than random chance at telling whether they were talking to a human would 'pass the Turing test', and therefore probably be artificially intelligent.

ChatGPT has destroyed the Turing test the way that Deep Blue destroyed chess as a marker of intelligence. We're there. And finding ourselves there, we still find that we're not at 'artificial intelligence', whatever that is.

But the problem is where we find ourselves. You see, supervising anyone technical or scientific means judging whether they're doing their work well without understanding what that work is.

"The buck stops here", on the President's or the CEO's desk, but you can't seriously expect the President or CEO to understand nuclear physics. People spend their whole lives working to understand nuclear physics. And the President or CEO would also have to understand the economy, structural engineering, and everything else that comes across their desk.

As such, and this is something that has gotten me fired from so many jobs, CEOs and Presidents, leaders, *graders*, have used confidence as a proxy for accuracy. They cannot tell which of two disagreeing nuclear physicists is correct - they can easily tell which is more confident. This has always gotten me fired, because my first answer to any question is 'it's complicated', and 'it's never either/or, it's always both/and' is equally quick to come to my mouth. Look how much I've written so far, for God's sake, in an article that could have been a comment that could have been a like.

ChatGPT produces a confident answer to whatever you ask it. Sometimes this results in hilarity, as with Elon's recent jury-rigging of Grok's system to demand that it respect his South African conspiracy theories. But as a general rule, ChatGPT can confidently argue for absolute bullshit, because ChatGPT is a machine designed to generate confident answers for things, not true answers for things.

I believe we will ultimately find this as destructive to intellectual life as the Maxim gun was on the battlefields of Europe.

Granted, I don't believe that the answer is some sort of Butlerian Jihad against all computer research. The only thing worse than a battlefield where both sides have the Maxim gun is a battlefield where only one side has the Maxim gun. The technology is inevitable, and unilateral disarmament is not how you leave an arms race. We just need to start training our graders to stop using confidence as a proxy for accuracy.

Considering that these 'masters of the universe' react badly to being told they're wrong, though, this is going to be a very painful and very long process.